Best LLM for Chatbots: Which Models Are Best for Building an AI Chatbot?

Well, like everything in life, it depends. We offer a customer support automation platform and spend a large share of our time and resources evaluating, benchmarking, and deploying the best Generative AI models for our customers. In this article, we share what we learned from evaluating popular Large Language Models (LLMs) for customer support automation in particular: Llama 2, Mistral, GPT-4, and GPT-3.5. The scope covers general-purpose chatbots like ChatGPT as well as customer service chatbots that are trained on a business's docs and data. We also compared how these models perform across different providers, such as OpenAI, Azure, and other emerging provider platforms.

Evaluation Criteria

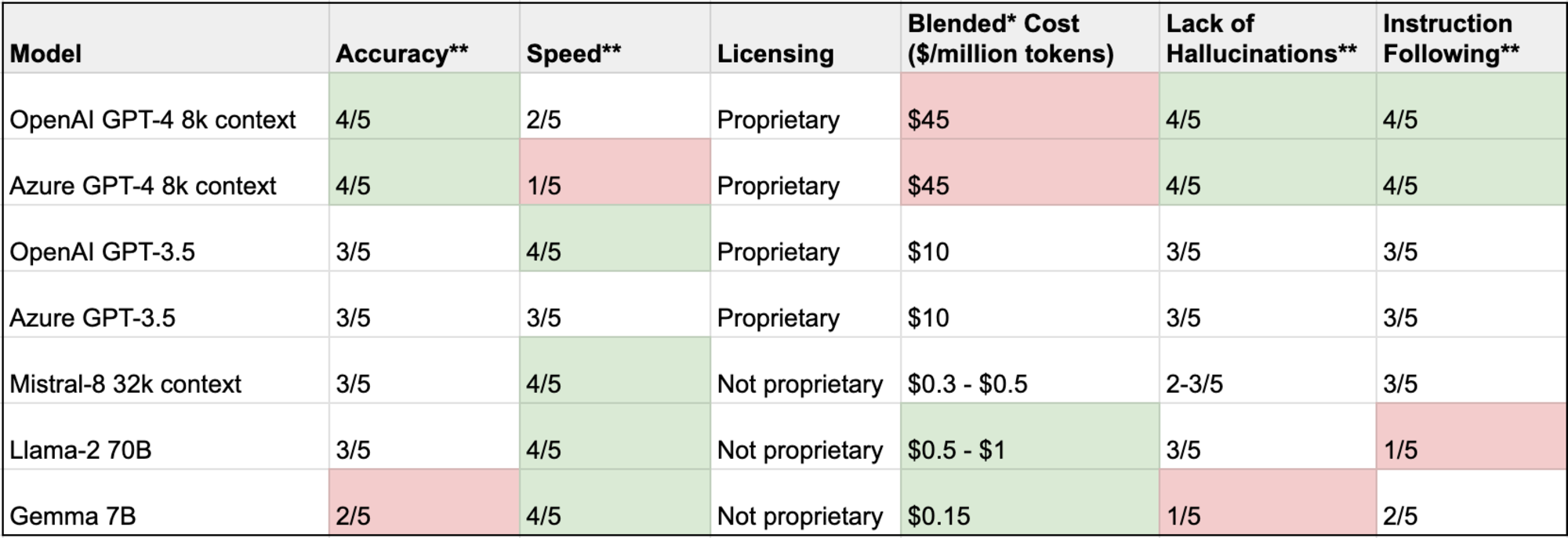

While several benchmarks, evaluation methods, and results on the out-of-the-box performance of popular LLMs are available online, we wanted to evaluate models specifically for the customer support domain, where a model must interpret user inputs, understand conversational context, and generate accurate, contextually relevant responses. We prioritized the following dimensions: Accuracy, Speed, Proprietary vs. non-proprietary models, Cost, Lack of Hallucinations, and Instruction Following.

Accuracy

Accuracy and correctness of responses matter most to us and our customers, because we are not building demo chatbots but production products that collect user feedback. We value our customers’ brands and trust highly and work to ensure that only accurate answers without hallucinations are generated, across varying conversational contexts.

Most companies use AI chatbots together with a RAG (Retrieval-Augmented Generation) setup. It’s important that responses are produced only from the whitelisted information sources and reference data that have been fed into the RAG pipeline.
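
To make the whitelisting requirement concrete, here is a minimal Python sketch of one way to enforce it: retrieved passages are filtered against an allowlist of approved sources before the prompt is built, and the model is told to answer only from that context. The `Passage` type, the source IDs, the sample policy text, and the prompt wording are hypothetical illustrations, not part of any specific RAG framework, and prompt instructions alone do not guarantee grounded output.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Passage:
    source: str  # ID or URL of the document the passage came from
    text: str


# Hypothetical allowlist of approved knowledge-base sources.
ALLOWED_SOURCES: Set[str] = {"kb/refund-policy", "kb/shipping-faq"}


def filter_to_allowlist(passages: List[Passage], allowed: Set[str]) -> List[Passage]:
    """Drop any retrieved passage whose source is not on the allowlist."""
    return [p for p in passages if p.source in allowed]


def build_grounded_prompt(question: str, passages: List[Passage]) -> Optional[str]:
    """Build a prompt restricted to approved context, or None if nothing qualifies."""
    if not passages:
        return None  # nothing approved to answer from; hand off instead of guessing
    context = "\n\n".join(f"[{p.source}]\n{p.text}" for p in passages)
    return (
        "Answer the customer's question using ONLY the context below. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


retrieved = [
    Passage("kb/refund-policy", "Refunds are issued within 14 days."),
    Passage("random-forum-post", "Someone said refunds take a year."),
]
approved = filter_to_allowlist(retrieved, ALLOWED_SOURCES)
prompt = build_grounded_prompt("How long do refunds take?", approved)
print(prompt)
```

Returning `None` when no approved passage survives lets the caller route the conversation to a human rather than letting the model improvise.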

Speed

Our customers deploy our AI solutions in production as AI chatbots, autonomous agents, or agent augmentation solutions, and they care about support KPIs such as first response time and total resolution time. As a result, fast responses are critical for a good customer experience.

If you are building a real-time, customer-facing AI chatbot, speed matters. Smaller LLMs (under 10B parameters) are noticeably faster and return results on the order of milliseconds, while larger LLMs take a few seconds. This difference has a real impact on the usability and customer experience of a chatbot. However, if you are processing a batch workload and do not need real-time results, this criterion becomes moot.
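
When comparing speed, it helps to look at the distribution of response times rather than a single run. The sketch below assumes you can wrap each model call in a plain Python function; `stub_model` is a hypothetical stand-in that just sleeps, so swap in your own provider client. It reports median and 95th-percentile latency for end-to-end calls and does not measure time-to-first-token for streamed responses.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(call: Callable[[str], str], prompts: List[str]) -> List[float]:
    """Time each end-to-end call and return latencies in milliseconds."""
    latencies = []
    for prompt in prompts:
        start = time.perf_counter()
        call(prompt)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies_ms: List[float]) -> Dict[str, float]:
    """Median and 95th-percentile latency (needs at least two samples)."""
    cut_points = statistics.quantiles(latencies_ms, n=100)  # 99 percentile cut points
    return {"p50_ms": statistics.median(latencies_ms), "p95_ms": cut_points[94]}


def stub_model(prompt: str) -> str:
    # Hypothetical stand-in for a real provider call; sleeps about 5 ms.
    time.sleep(0.005)
    return "stub answer"


stats = summarize(measure_latency(stub_model, ["Where is my order?"] * 20))
print(stats)
```

Tail latency (p95) is usually what customers notice, so it is worth tracking alongside the median.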

Proprietary vs non-proprietary models

Non-proprietary and/or open-source LLMs offer the advantage of transparency, allowing developers and researchers to scrutinize, modify, and improve the model's code, which fosters innovation and community collaboration. On the other hand, proprietary LLMs, maintained by private entities, can ensure tighter control over security and intellectual property, potentially offering more stable and reliable solutions for commercial applications.

GPT-4, a proprietary model, is available from only two providers: OpenAI and Azure (surprisingly, their performance characteristics are not identical). On the other side are non-proprietary models such as Llama (from the Meta AI team), Mistral, and Gemma, which have open weights and more permissive licensing. If you use a hosted provider for these models, you can shop around and have more options.

Cost

Costs, and the dimensions along which they are charged, depend on whether you use a proprietary or non-proprietary model and whether you host it yourself or use a provider. For proprietary models, you pay a per-token cost, and hosted providers of non-proprietary models will also likely charge per token. Note that because there are only two providers of the proprietary model, you are likely to pay much more than you would with hosted providers of non-proprietary models. For example, Mistral costs 1/100th as much as GPT-4 (although in our analysis GPT-4 performs better) and 1/10th as much as GPT-3.5. Alternatively, if you host a non-proprietary model on your own infrastructure, the costs include GPUs and the engineering effort to manage that infrastructure.
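
As a rough illustration of per-token pricing, the sketch below estimates per-query and monthly cost from token counts, and computes a blended price as the simple average of input and output token prices (the blended-cost definition used in our results). The prices, token counts, and query volume are hypothetical placeholders, not any provider's actual rates, and self-hosting costs (GPUs, engineering time) are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_1m: float   # USD per 1M input tokens
    output_per_1m: float  # USD per 1M output tokens

    @property
    def blended_per_1m(self) -> float:
        # Blended cost: simple average of input and output token prices.
        return (self.input_per_1m + self.output_per_1m) / 2


def cost_per_query(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request with the given token counts."""
    return (input_tokens * p.input_per_1m + output_tokens * p.output_per_1m) / 1_000_000


def monthly_cost(p: Pricing, input_tokens: int, output_tokens: int, queries: int) -> float:
    return cost_per_query(p, input_tokens, output_tokens) * queries


# Hypothetical prices for illustration only; check each provider's current price list.
large = Pricing(input_per_1m=30.0, output_per_1m=60.0)
small = Pricing(input_per_1m=0.25, output_per_1m=0.25)

# RAG prompts tend to be long (retrieved context) while answers are short.
for name, p in [("large", large), ("small", small)]:
    print(name, p.blended_per_1m, round(monthly_cost(p, 2000, 300, 100_000), 2))
```

With these placeholder numbers, 100,000 queries per month at 2,000 input and 300 output tokens each come to about $7,800 for the large model and about $57.50 for the small one; with long RAG contexts, the input price dominates the bill.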

Lack of Hallucinations

We measured this as the percentage of responses that contained a hallucination. We distinguish hallucination from accuracy: a response can contain accurate information and a hallucination at the same time. Our criterion was simple: every tangible piece of information must come from the whitelisted sources of information.
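
Because accuracy and hallucination are scored separately, a single response can count toward both. A minimal sketch of how the two rates could be tallied, assuming each response has already been labeled by a reviewer with two independent booleans (the `GradedResponse` type and the sample labels are hypothetical):

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class GradedResponse:
    accurate: bool      # reviewer judged the answer correct
    hallucinated: bool  # reviewer found a fact not present in the whitelisted sources


def rates(graded: List[GradedResponse]) -> Dict[str, float]:
    """Accuracy and hallucination rate are computed independently of each other."""
    if not graded:
        raise ValueError("need at least one graded response")
    n = len(graded)
    return {
        "accuracy": sum(g.accurate for g in graded) / n,
        "hallucination_rate": sum(g.hallucinated for g in graded) / n,
        "accurate_but_hallucinated": sum(g.accurate and g.hallucinated for g in graded) / n,
    }


sample = [
    GradedResponse(accurate=True, hallucinated=False),
    GradedResponse(accurate=True, hallucinated=True),  # right answer plus an invented detail
    GradedResponse(accurate=False, hallucinated=True),
    GradedResponse(accurate=False, hallucinated=False),
]
print(rates(sample))
# {'accuracy': 0.5, 'hallucination_rate': 0.5, 'accurate_but_hallucinated': 0.25}
```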

Instruction Following

LLMs often need to follow instructions precisely. For instance, a model might introduce a phrase it was explicitly told not to use. Most people use LLMs with RAG and want structured output (e.g. JSON, YAML, CSV), so it’s important that the model follows the instructions and returns the requested format. Be careful when switching between models: for example, the base Llama 2 model is not instruction fine-tuned, while the instruction fine-tuned variant (Llama-2 inst) is better at following instructions.
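
Instruction following can be partly checked automatically. The sketch below assumes the prompt asked for a JSON object with specific keys and banned certain phrases. It uses only Python's standard `json` module; the key names and the banned phrase are hypothetical examples. A check like this catches format and wording violations, not whether the content itself is correct.

```python
import json
from typing import List


def check_instructions(raw: str, required_keys: List[str], banned_phrases: List[str]) -> List[str]:
    """Return the instruction violations found in one model response."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["response is not valid JSON"]
    if not isinstance(data, dict):
        return ["top-level JSON value is not an object"]
    violations = []
    missing = [k for k in required_keys if k not in data]
    if missing:
        violations.append(f"missing keys: {missing}")
    lowered = raw.lower()
    for phrase in banned_phrases:
        if phrase.lower() in lowered:
            violations.append(f"banned phrase: {phrase!r}")
    return violations


REQUIRED = ["answer", "source"]
BANNED = ["As an AI language model"]

print(check_instructions('{"answer": "Refunds take 14 days.", "source": "kb/refund-policy"}', REQUIRED, BANNED))
print(check_instructions('Sure! Here is the JSON: {"answer": "..."}', REQUIRED, BANNED))
print(check_instructions('{"answer": "As an AI language model, I cannot say."}', REQUIRED, BANNED))
```

Running the same checks over every model's outputs gives a simple, comparable instruction-following violation rate.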

Evaluation Setup

Evaluation dataset

We hand-crafted an evaluation dataset based on our experience automating customer support queries through chatbots and email bots. The dataset contained queries that can be answered from support FAQs and knowledge articles, and it included data points from different industries with varying question complexity. We ran each model against this hand-crafted, high-quality dataset.

Prompts

We kept the prompts the same across all models. The prompts selected for the evaluation had already proven successful at automating a large volume of both simple and complex support queries.

Models and Providers

We evaluated different combinations of models and providers, and found cases where the same model, such as GPT-4, gave different results across providers (e.g. Azure and OpenAI). This made it essential to evaluate each model-provider combination separately when measuring and comparing capabilities.
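
A minimal sketch of an evaluation loop that holds the prompt and dataset fixed and varies only the (provider, model) pair. The `call_model` function, provider and model names, prompt template, and substring-based scoring are all hypothetical simplifications for illustration; the substring check stands in for proper grading and is not a description of how we scored responses.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class EvalCase:
    question: str
    expected: str    # key fact a correct answer must contain
    industry: str
    complexity: str  # e.g. "simple" or "complex"


# One prompt template shared by every model/provider pair.
PROMPT_TEMPLATE = "You are a customer support assistant. Answer concisely.\n\nQuestion: {question}"

Combo = Tuple[str, str]  # (provider, model)


def evaluate(
    cases: List[EvalCase],
    combos: List[Combo],
    call_model: Callable[[str, str, str], str],
) -> Dict[Combo, float]:
    """Score each (provider, model) pair on the same cases with the same prompt."""
    scores: Dict[Combo, float] = {}
    for provider, model in combos:
        hits = 0
        for case in cases:
            answer = call_model(provider, model, PROMPT_TEMPLATE.format(question=case.question))
            # Crude substring check standing in for real grading.
            if case.expected.lower() in answer.lower():
                hits += 1
        scores[(provider, model)] = hits / len(cases)
    return scores


def fake_call(provider: str, model: str, prompt: str) -> str:
    # Hypothetical stub: the same model answers differently on two providers.
    return "Refunds take 14 days." if provider == "provider-a" else "I'm not sure."


cases = [EvalCase("How long do refunds take?", "14 days", "e-commerce", "simple")]
scores = evaluate(cases, [("provider-a", "model-x"), ("provider-b", "model-x")], fake_call)
print(scores)
```

Keying results by the (provider, model) pair rather than by model alone is what surfaces provider-level differences like the one described above.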

Large Language Models Evaluation Results

* Blended cost is the average of input and output token cost

** Scale of 1-5 where 5 is the highest and 1 is the lowest

Key Insights and Recommendations

The best AI model for a business serving clients with a large ticket value

Who are you? Consider the following if you are an airline, a legal firm, or an enterprise B2B software business with a large ticket value (Annual Contract Value of $10K or more) and/or high legal risk. You cannot tolerate inaccuracies, hallucinations, or security incidents. Remember how an airline was held liable for misinformation that an AI chatbot on its website gave a customer, and had to pay damages?

Our recommendation: We recommend one of the following options:

Azure GPT-4 offered and managed by a trusted customer support vendor, or

An in-house non-proprietary fine-tuned model offered by a trusted customer support vendor (like us!) that has strong safeguards in place to prevent inaccuracies and hallucinations, or

Azure GPT-4, if you have the engineering bandwidth to build, deploy, and manage your own ML infrastructure (we wouldn't take this responsibility lightly!). Note that we would still recommend against piping queries directly to GPT-4 without a trusted safeguard engine, as you may be held liable for hallucinations.

Why? The risk-reward ratio of smaller LLMs is hard to justify, so we recommend larger LLMs or fine-tuned smaller LLMs. From a security perspective, you may prefer sending data to Microsoft Azure rather than to a smaller company like OpenAI. Even though GPT-4 is the slowest and most expensive model, it gives you the highest accuracy and the lowest hallucination rate, which is what you need. You can get performance similar to GPT-4 when a trusted customer support vendor fine-tunes non-proprietary models (such as Mistral) on your high-value data.

The right model for a small or medium-sized business with a low ticket value

Who are you? Consider the following recommendation if you are a small e-commerce business built on Shopify, a gaming company with many free/freemium customers, a business with a strong PLG (Product-Led Growth) motion and many free/freemium users, or a low- to mid-end SaaS business. You get the picture.

Our recommendation: We recommend the following option:

A non-proprietary model (e.g. Mistral or Gemma) offered by a trusted customer support vendor that has strong safeguards in place to prevent inaccuracies and hallucinations. We wouldn't recommend using such a model out of the box without safeguards, as more than 50% of its responses will contain hallucinations.
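
To illustrate the idea of a safeguard gate (this is not our hallucination detection model), here is a deliberately naive Python sketch: each sentence of the model's answer must share most of its words with at least one approved source passage, and any answer that fails is replaced by a hand-off to a human agent. The word-overlap heuristic, the 0.6 threshold, and the hand-off message are hypothetical; lexical overlap misses paraphrases and can be fooled, so production systems need stronger checks.

```python
import re
from typing import List, Set

HANDOFF = "Let me connect you with a member of our support team."


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def is_grounded(answer: str, sources: List[str], min_overlap: float = 0.6) -> bool:
    """Naive check: each sentence must share most of its words with some source."""
    source_words = [_words(s) for s in sources]
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _words(sentence)
        if not words:
            continue
        best = max((len(words & sw) / len(words) for sw in source_words), default=0.0)
        if best < min_overlap:
            return False
    return True


def safeguarded_reply(answer: str, sources: List[str]) -> str:
    """Return the model's answer only if it passes the check; otherwise hand off."""
    return answer if is_grounded(answer, sources) else HANDOFF


sources = ["Refunds are issued within 14 days of receiving the returned item."]
print(safeguarded_reply("Refunds are issued within 14 days.", sources))
print(safeguarded_reply("Refunds are issued within 14 days. You also get a free gift card.", sources))
```

The second answer is correct in its first sentence but invents a gift card, so the whole reply is withheld: the gate treats an accurate answer with a hallucinated detail as unsafe.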

Why? High speed and low cost with reasonable accuracy are your priorities. You don't need the much slower and 10x more expensive GPT-4 models. You can get reasonable accuracy from emerging non-proprietary models, provided they come with the right safeguards and hallucination detection models offered by trusted software vendors (like us!).

Hybrid

If you do not fall into either of the two buckets above, the answer is likely a hybrid of the two recommendations. We offer a free consultation that helps you figure out the right model for your business.

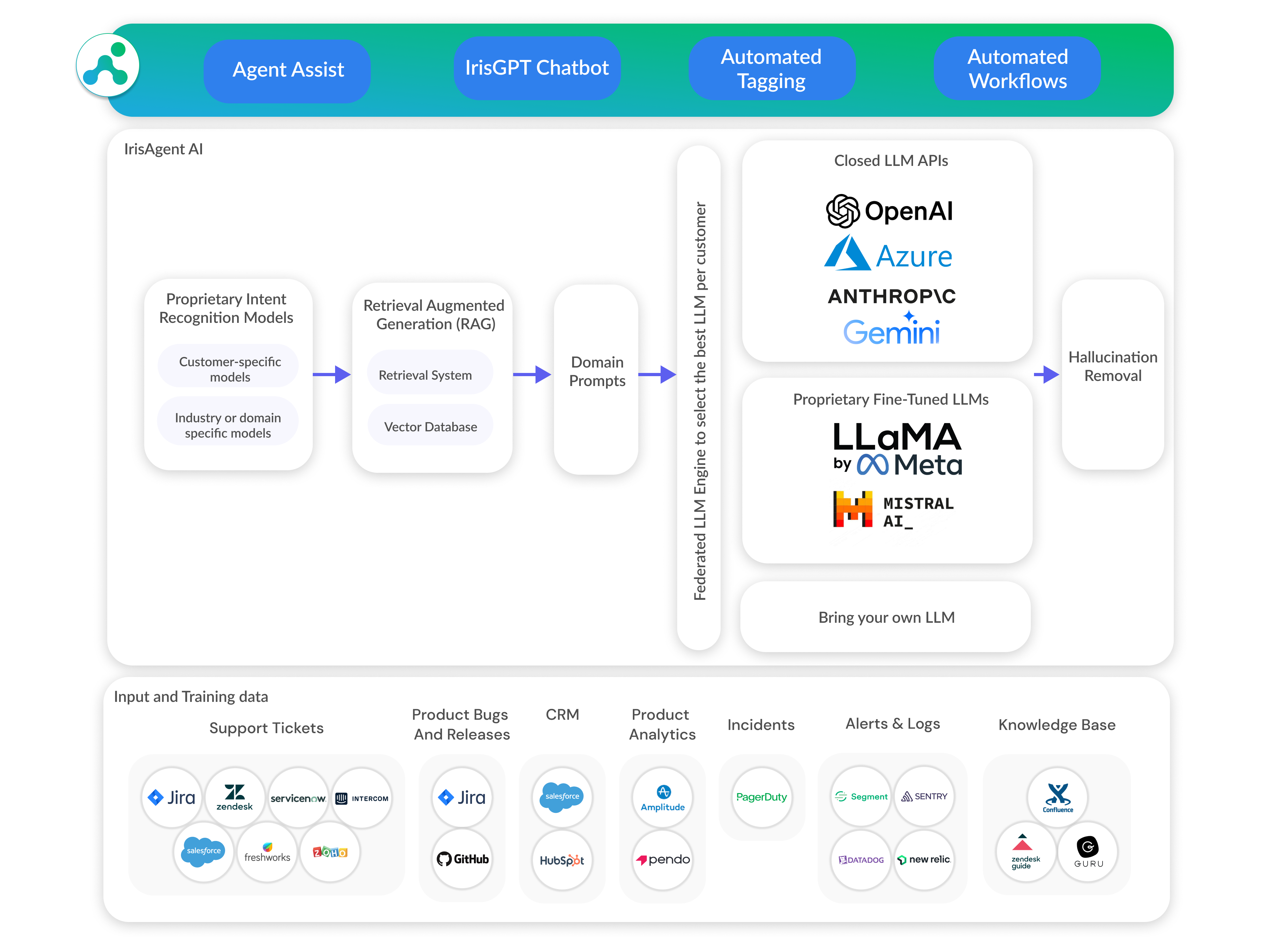

Our approach to GenAI chatbots

Our GenAI stack for building AI chatbots and ticket automation platforms includes multiple LLMs, a federation layer to select the best LLM, LLMs fine-tuned on customer-specific and domain-specific data, proprietary intent recognition models, RAG, domain-specific prompts, and hallucination detection and prevention. For larger enterprises, we also offer model deployments on customers’ own premises. We have found that smaller LLMs fine-tuned on high-quality domain-specific data perform as well as or even better than the more expensive and slower larger LLMs. Additionally, integrating search engines extends AI chatbots with access to current events, links to sources, and real-time web results.
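
The selection logic of a federation layer is not spelled out here, so the following is only a hypothetical sketch of what a simple routing policy could look like. It assumes each query's intent has already been classified upstream (for example by an intent recognition model); high-risk intents go to the highest-quality model, and everything else goes to the cheapest model that clears a quality bar. The `ModelOption` fields, model names, intent labels, and numbers are invented for illustration; the quality score mirrors the 1-5 scale used in our results.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_queries: float  # estimated USD per 1,000 queries
    quality: int                # 1-5 score from offline evaluation


def route(intent: str, options: List[ModelOption], high_risk: Set[str], min_quality: int = 3) -> ModelOption:
    """Pick a model for one query based on its (already classified) intent."""
    if intent in high_risk:
        # Risky topics: accuracy first, regardless of cost.
        return max(options, key=lambda o: o.quality)
    acceptable = [o for o in options if o.quality >= min_quality]
    # Everything else: cheapest model that clears the quality bar
    # (falls back to the cheapest overall if none does).
    return min(acceptable or options, key=lambda o: o.cost_per_1k_queries)


OPTIONS = [
    ModelOption("large-general", cost_per_1k_queries=50.0, quality=5),
    ModelOption("small-finetuned", cost_per_1k_queries=0.5, quality=4),
]
HIGH_RISK = {"refund_dispute", "legal_question"}

print(route("refund_dispute", OPTIONS, HIGH_RISK).name)  # large-general
print(route("order_status", OPTIONS, HIGH_RISK).name)    # small-finetuned
```

Keeping the policy as a small pure function makes it easy to test and to adjust as new offline evaluation scores come in.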

The LLM space in artificial intelligence is exciting and evolving fast. If you are on your own GenAI journey, evaluating different models and training data, or looking for a trusted partner for your automation needs, we would love to chat with you!